I finally added translations to my Lisp web app \o/

I wanted to do it with gettext and Djula templates. There seemed to be some support for this, but it turned out… not straightforward. After two failed attempts, I decided to offer a little 90 USD bounty for the task (I announced it on the project's issues and on Discord; keep an eye on them for future bounties ;) ).

@fstamour took up the challenge, and I'll be eternally grateful to him :D He kindly set everything up, answered my questions and tracked down annoying bugs. BTW, I recommend you have a look at his ongoing breeze project (towards refactoring tools for CL) and at local-gitlab.

Many thanks go as usual to @mmontone for incorporating changes into Djula after our feedback. Here's the Djula documentation:

Djula's gettext backend is based on the rotatef/gettext library. It worked fine. I left some feedback there anyway.

Why gettext

GNU gettext is the canonical tool for bringing translations to software projects. Using it gives us access to its range of localization features, and it unlocks modern web-based translation tools (like Weblate), should you hope to have external translators for your project.

I looked at other Lisp libraries:

- cl-i18n, “an i18n library. Load translations from GNU gettext text or binary files or from its native format. Localisation helpers of plural forms.”

It may ship improvements upon gettext, but @fstamour ultimately chose gettext over it:

I ended up with so much less code with gettext than with cl-i18n and I found gettext’s code much easier to read if the documentation was lacking.

(BTW, @cage has been really helpful in answering many questions, hello o/ ) He explained:

Seems that the library you pointed out does not support any files but MO (binary) files. cl-i18n can parse a couple more formats, like its own, and includes an extractor for translatable strings in source files, so it can be used without any of the gettext toolchain. But they address the same problem in more or less the same way. :)

- translate is not gettext-compatible either; it has a function to find missing translations, and it got a Djula backend last April. Look how easy it is to add a backend:

```lisp
;; translation-translate.lisp
(in-package :djula)

(defmethod backend-translate ((backend (eql :translate)) string language &rest args)
  (apply #'translate:translate string language args))
```

- cl-locale, a “Simple i18n library for Common Lisp”, works with hand-written dictionaries. It is not gettext-compatible either, and although it has a Djula backend, it has no tool to collect all the translatable strings.
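The translate backend above fits in a single method because Djula's backend-translate presumably dispatches on the backend keyword through an EQL specializer. Here is a toy model of that dispatch in plain CL; TOY-BACKEND-TRANSLATE and its :echo and :shout backends are hypothetical and only mimic the shape of the method shown above.

```lisp
;; Toy model of backend dispatch. TOY-BACKEND-TRANSLATE and the :echo and
;; :shout backends are hypothetical; they only mimic the shape of
;; djula::backend-translate shown above.
(defgeneric toy-backend-translate (backend string language &rest args)
  (:documentation "Translate STRING into LANGUAGE with the backend named BACKEND."))

;; Each backend is just one method, chosen by an EQL specializer on a keyword.
(defmethod toy-backend-translate ((backend (eql :echo)) string language &rest args)
  (declare (ignore language args))
  string)

(defmethod toy-backend-translate ((backend (eql :shout)) string language &rest args)
  (declare (ignore language args))
  (string-upcase string))
```

Adding a backend is then one more DEFMETHOD, and calling with a keyword that has no method signals an error (NO-APPLICABLE-METHOD).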

Djula is a very nice templating library that works with HTML templates, much like Django templates. It has support for 2 translation backends, although I found it hard to get started with. It should be better now, but you're welcome to improve things further.

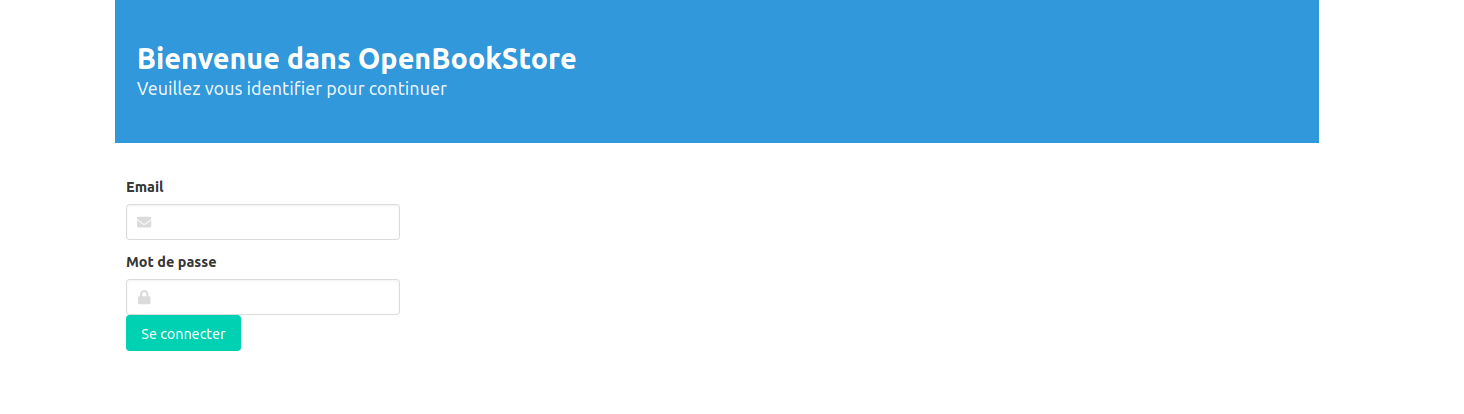

To translate a string in a template, we enclose it between {_ _} marks like so:

```html
<p> {_ "Please login to continue" _} </p>
```
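Conceptually, collecting these strings means finding each {_ ... _} marker and reading the quoted string inside it. Here is a toy sketch of that idea in plain CL, not Djula's parser: EXTRACT-MARKED-STRINGS is hypothetical and assumes each marker holds exactly one double-quoted string.

```lisp
;; Toy extractor for {_ "..." _} markers. EXTRACT-MARKED-STRINGS is
;; hypothetical: it assumes each marker holds one double-quoted string
;; and is not how Djula itself parses templates.
(defun extract-marked-strings (template)
  "Return, in order, the strings enclosed in {_ ... _} markers in TEMPLATE."
  (let ((*read-eval* nil)   ; never evaluate #. forms found in a template
        (start 0)
        (strings '()))
    (loop
      (let ((open (search "{_" template :start2 start)))
        (unless open
          (return (nreverse strings)))
        ;; Let the Lisp reader parse the quoted string, escapes included.
        (multiple-value-bind (object end)
            (read-from-string template t nil :start (+ open 2))
          (when (stringp object)
            (push object strings))
          (setf start end))))))
```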

We will set up what's necessary to collect those strings and handle them with gettext.

Extracting strings from .lisp source files

We need to extract strings from .lisp source files and from HTML templates.

xgettext can already collect strings for a lot of languages. It understands the Lisp syntax; we only need to tell it which marker we use for strings to translate. We will use the underscore function, as is the convention in many languages out there:

```lisp
(_ "welcome")
```
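To make the idea concrete, here is a toy model of what _ does at run time: look the msgid up in the catalogue of the current locale and, as gettext does, fall back to the msgid itself when there is no translation. *TOY-LOCALE*, *TOY-CATALOG* and TOY-_ are hypothetical stand-ins, not the gettext library's API.

```lisp
;; Toy model of _ at run time. *TOY-LOCALE*, *TOY-CATALOG* and TOY-_ are
;; hypothetical stand-ins for gettext's catalogues, not its API.
(defvar *toy-locale* nil
  "The current locale, e.g. \"fr_fr\", or NIL for no translation.")

(defvar *toy-catalog* (make-hash-table :test 'equal)
  "Maps a (LOCALE . MSGID) pair to its translation (the msgstr).")

(setf (gethash '("fr_fr" . "welcome") *toy-catalog*) "bienvenue")

(defun toy-_ (msgid)
  "Return the translation of MSGID in *TOY-LOCALE*, or MSGID itself."
  (gethash (cons *toy-locale* msgid) *toy-catalog* msgid))
```

Because of the fallback, untranslated strings simply show up in the source language instead of breaking the page.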

We have to set up the gettext library:

```lisp
(setf (gettext:textdomain) "bookshops")
;; ^^ a meaningful name for gettext's catalogue.
(gettext:setup-gettext #.*package* "bookshops")
```

This creates new functions under the hood in the current package:

```lisp
(defmacro setup-gettext (package default-domain)
  (setf package (find-package package))
  (check-type default-domain string)
  `(progn
     (defun ,(intern "GETTEXT" package) (msgid &optional domain category locale)
       (gettext* msgid (or domain ,default-domain) category locale))
     (defun ,(intern "_" package) (msgid &optional domain category locale)
       (gettext* msgid (or domain ,default-domain) category locale))
     (defun ,(intern "NGETTEXT" package) (msgid1 msgid2 n &optional domain category locale)
       (ngettext* msgid1 msgid2 n (or domain ,default-domain) category locale))
     (defun ,(intern "N_" package) (msgid)
       msgid)
     (defun ,(intern "CATALOG-META" package) (&optional domain category locale)
       (catalog-meta* (or domain ,default-domain) category locale))))
```

So yes, it creates the _ function. It does this in a macro so that the generated functions look up our own domain ("bookshops") by default. You can now export it:

```lisp
(defpackage :bookshops.i18n
  (:use :cl)
  (:import-from :gettext #:*current-locale*)
  (:export
   #:_
   #:n_
   #:*current-locale*
   #:list-loaded-locales
   #:set-locale
   #:with-locale
   #:update-djula.pot)
  (:documentation "Internationalisation utilities"))
```

We can now call xgettext:

```shell
xgettext --language=lisp --from-code=UTF-8 --keyword=_ --output=locale/ie.pot --sort-output
```

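The --keyword argument (-k) tells it we are using the underscore. Hey, we also want to collect the N_ ones. As the macro above shows, N_ simply returns its argument: it marks a string for extraction without translating it on the spot, so it can be translated later with _. (Grammatical forms that depend on a number, typically plurals, are handled by ngettext.)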

```shell
xgettext -k_ -kN_
```
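For the plural side, a toy ngettext may help: the locale's Plural-Forms rule turns n into an index into the translated forms. French uses plural=(n > 1) and English plural=(n != 1), so they disagree for n = 0. TOY-PLURAL-INDEX and TOY-NGETTEXT are hypothetical and hard-code these two rules, whereas real catalogues usually take the rule from their Plural-Forms header.

```lisp
;; Toy model of plural selection. TOY-PLURAL-INDEX and TOY-NGETTEXT are
;; hypothetical; real catalogues take the rule from their Plural-Forms header.
(defun toy-plural-index (locale n)
  "Return the index of the plural form to use for N in LOCALE."
  (if (equal locale "fr_fr")
      (if (> n 1) 1 0)      ; French: plural=(n > 1);
      (if (/= n 1) 1 0)))   ; default, English-style: plural=(n != 1);

(defun toy-ngettext (msgid1 msgid2 n &optional locale forms)
  "Pick the right entry of FORMS (the translated plural forms) for N.
Without translations, fall back like gettext: MSGID1 if N is 1, else MSGID2."
  (if forms
      (nth (toy-plural-index locale n) forms)
      (if (= n 1) msgid1 msgid2)))
```

For instance, (format nil (toy-ngettext "~d book" "~d books" 0 "fr_fr" '("~d livre" "~d livres")) 0) gives "0 livre", while the untranslated English fallback gives "0 books".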

OK, now we want to find all our .lisp sources and extract strings from all of them. We'll search for them with a call to find . -iname "*.lisp" …. You have an example in Djula's doc; here's how we did it (ahem, how Francis did it) with a Makefile target:

```makefile
# List .lisp files under our src/ directory, unless their name contains a #
# (e.g. Emacs auto-save files)
SRC := $(shell find src/ -name '*.lisp' -a ! -name '*\#*')
HTML := $(shell find src/ -name '*.html' -a ! -name '*\#*')
DEPS := $(SRC) $(HTML) bookshops.asd # and some more...

# list of supported locales
LOCALES := fr_fr
# Example of how the variable should look after adding a new locale:
# LOCALES := fr_FR en_GB

.PHONY: tr
tr: ${MO_FILES}

PO_TEMPLATE_DIR := locale/templates/LC_MESSAGES
PO_TEMPLATE := ${PO_TEMPLATE_DIR}/bookshops.pot

# Rule to extract translatable strings from SRC
${PO_TEMPLATE_DIR}/lisp.pot: $(SRC)
	mkdir -p $(@D)
	xgettext -k_ -kN_ --language=lisp -o $@ $^

# and then, come the rules to extract strings from HTML templates
# and build everything.
```

Extracting strings from HTML templates

Now, we need to fire up a Lisp and call the Djula function that knows how to collect marked strings.

The Djula doc shows how to do it with djula:xgettext-templates:

```shell
sbcl --eval '(ql:quickload :my-project)' \
     --eval '(djula::xgettext-templates
               :my-project-package
               (asdf:system-relative-pathname :my-project "i18n/xgettext.lisp"))' \
     --quit
```

This function receives 2 arguments: your project package and the output file where the results are stored. It stores them in a .lisp file using regular gettext syntax, so this .lisp file is then read by a regular xgettext command (looking for _ strings), and this command ultimately creates the .pot file:

```shell
find src -iname "*.lisp" | xargs xgettext --from-code=UTF-8 --keyword=_ --output=i18n/my-project.pot --sort-output
```

We did it a bit differently, with two other functions, in order to keep track of the source filename of each string (our source here):

```lisp
#|
This could technically be just

(mapcan #'djula.locale:file-template-translate-strings
        (djula:list-asdf-system-templates "bookshops" "src/web/templates"))

But I (fstamour) made it just a bit more complex in order to keep track of the source (just the
filename) of each translatable string. Hence why the hash-table returned is named `locations`.
|#

(defun extract-translate-strings ()
  "Extract all {_ ... _} strings from the djula templates."
  (loop
    :with locations = (make-hash-table :test 'equal)
    :for path :in (djula:list-asdf-system-templates "bookshops" "src/web/templates")
    :for strings = (djula.locale:file-template-translate-strings path)
    :do (loop :for string :in strings
              :unless (gethash string locations)
                :do (setf (gethash string locations) path))
    :finally (return locations)))

(defun update-djula.pot ()
  "Update djula.pot from *.html files."
  (with-open-file (s (asdf:system-relative-pathname "bookshops" "locale/templates/LC_MESSAGES/djula.pot")
                     :direction :output
                     :if-exists :supersede
                     :if-does-not-exist :create)
    (let* ((locations (extract-translate-strings))
           (strings (alexandria:hash-table-keys locations)))
      (loop
        :for string :in strings
        :for location = (gethash string locations)
        :do (format s "~%#: ~a~%#, lisp-format~%msgid ~s~%msgstr \"\" ~%"
                    (enough-namestring location (asdf:system-relative-pathname "bookshops" ""))
                    string)))))
```
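A side note on escaping, offered as a hedged aside: update-djula.pot prints each msgid with ~s, i.e. with Lisp's own string syntax. For double quotes and backslashes this coincides with PO escaping, but a template string containing a newline would be written with a raw newline, whereas PO strings expect C-style escapes such as \n, which could trip up msgfmt. Here is a small sketch of explicit escaping; PO-ESCAPE and FORMAT-PO-ENTRY are hypothetical helpers, and they leave out the #, lisp-format flag line for brevity.

```lisp
;; A sketch of PO-style string escaping. PO-ESCAPE and FORMAT-PO-ENTRY are
;; hypothetical helpers, not part of gettext or Djula.
(defun po-escape (string)
  "Escape STRING for use inside a double-quoted PO msgid/msgstr."
  (with-output-to-string (out)
    (loop :for char :across string
          :do (case char
                (#\\ (write-string "\\\\" out))      ; \  becomes \\
                (#\" (write-string "\\\"" out))      ; "  becomes \"
                (#\Newline (write-string "\\n" out)) ; newline becomes \n
                (#\Tab (write-string "\\t" out))     ; tab becomes \t
                (t (write-char char out))))))

(defun format-po-entry (location msgid)
  "Return one untranslated PO entry for MSGID, with a #: reference comment."
  (format nil "~%#: ~a~%msgid \"~a\"~%msgstr \"\"~%" location (po-escape msgid)))
```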

So this is our Makefile:

```makefile
# Rule to extract translatable strings from djula templates
${PO_TEMPLATE_DIR}/djula.pot: $(HTML) src/i18n.lisp
	$(LISP) --non-interactive \
		--eval '(ql:quickload "deploy")' \
		--eval '(ql:quickload "cl+ssl")' \
		--eval '(asdf:load-asd (truename "bookshops.asd"))' \
		--eval '(push :djula-binary *features*)' \
		--eval '(ql:quickload :bookshops)' \
		--eval '(bookshops.i18n:update-djula.pot)'

# Rule to combine djula.pot and lisp.pot into bookshops.pot
${PO_TEMPLATE}: ${PO_TEMPLATE_DIR}/djula.pot ${PO_TEMPLATE_DIR}/lisp.pot
	msgcat --use-first $^ > $@

# Rule to generate or update the .po files from the .pot file
locale/%/LC_MESSAGES/bookshops.po: ${PO_TEMPLATE}
	mkdir -p $(@D)
	[ -f $@ ] || msginit --locale=$* \
		-i $< \
		-o $@ \
	&& msgmerge --update $@ $<

# Rule to create the .mo files from the .po files
locale/%/LC_MESSAGES/bookshops.mo: locale/%/LC_MESSAGES/bookshops.po
	mkdir -p $(@D)
	msgfmt -o $@ $<
```

Ultimately, this is the one make target we, as a developer, have to use:

```makefile
.PHONY: tr
tr: ${MO_FILES}
```
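For reference, the pattern rules above put one .po and one .mo file per locale under locale/<locale>/LC_MESSAGES/. Here is a hypothetical Lisp helper listing those paths for a list of locales, which could be handy from the REPL to check which catalogues exist; CATALOG-FILES is not part of the project.

```lisp
;; Hypothetical helper mirroring the layout used by the pattern rules above:
;; one .po and one .mo file per locale under locale/<locale>/LC_MESSAGES/.
(defun catalog-files (locales &key (domain "bookshops"))
  "Return a list of (LOCALE PO-FILE MO-FILE) entries, as relative namestrings."
  (loop :for locale :in locales
        :for dir = (format nil "locale/~a/LC_MESSAGES/" locale)
        :collect (list locale
                       (format nil "~a~a.po" dir domain)
                       (format nil "~a~a.mo" dir domain))))
```

For example, (remove-if #'probe-file (mapcar #'third (catalog-files '("fr_fr")))) would list the .mo files that are still missing, relative to *default-pathname-defaults*.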

Loading the translations

Once the gettext tools have run and we have added a couple of translations, we have to load them inside our Lisp app. We use gettext:preload-catalogs, as in:

```lisp
;; Only preload the translations into the image if we're not deployed yet.
(unless (deploy:deployed-p)
  (format *debug-io* "~%Reading all *.mo files...")
  (gettext:preload-catalogs
   ;; Tell gettext where to find the .mo files
   #.(asdf:system-relative-pathname :bookshops "locale/")))
```

This is a top-level form. We want it to run on our machine during development or when building the binary (situations where ASDF will find the required directories), but not when we run the binary (the location ASDF would look for would not exist on another machine), and we can do this with the help of Deploy (Cookbook recipe).

The gettext hash table is saved into the binary, so we correctly find our translations once it is deployed.
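Why the guard matters: #. evaluates its form when the file is read, and the resulting value is compiled in as a literal, so the pathname passed to preload-catalogs is the one computed on the build machine. A minimal illustration in plain CL; READ-TIME-VALUE is a hypothetical helper and /build/bookshops/ a made-up directory.

```lisp
;; READ-TIME-VALUE is a hypothetical helper: it reads SOURCE the way the
;; compiler's reader would, with *READ-EVAL* enabled so #. forms run.
(defun read-time-value (source)
  "Read one form from the string SOURCE, evaluating any #. forms in it."
  (let ((*read-eval* t))
    (read-from-string source)))
```

Reading "#.(+ 1 2)" hands back 3, not the list (+ 1 2). Likewise, the compiled preload-catalogs call carries whatever absolute directory asdf:system-relative-pathname returned at build time, hence the (deploy:deployed-p) check.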

During development

Set the current locale:

```lisp
(defun set-locale (locale)
  "Setf gettext:*current-locale* and djula:*current-language* if LOCALE seems valid."
  ;; It is valid to set the locale to nil.
  (when (and locale
             (not (member locale (list-loaded-locales)
                          :test 'string=)))
    (error "Locale not valid or not available: ~s" locale))
  (setf *current-locale* locale
        djula:*current-language* locale))

(defmacro with-locale ((locale) &body body)
  "Calls BODY with gettext:*current-locale* and djula:*current-language* set to LOCALE."
  `(let (*current-locale*
         djula:*current-language*)
     (set-locale ,locale)
     ,@body))
```

```lisp
BOOKSHOPS> (bookshops.i18n:set-locale "fr_fr") ;; <-- same as the ones declared in the Makefile
```

The change takes effect immediately.
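Why with-locale binds both variables with let before calling set-locale: the setf inside set-locale then only touches these fresh dynamic bindings, and the previous values come back when the body exits. Here is a self-contained toy version; *DEMO-LOCALE* and *DEMO-LANGUAGE* are hypothetical stand-ins for gettext:*current-locale* and djula:*current-language*, and a fixed list replaces list-loaded-locales.

```lisp
;; Hypothetical stand-ins for gettext:*current-locale*, djula:*current-language*
;; and (list-loaded-locales).
(defvar *demo-locale* nil)
(defvar *demo-language* nil)
(defparameter *demo-available-locales* '("fr_fr"))

(defun set-demo-locale (locale)
  "Set both variables to LOCALE, after checking that it is available (NIL is allowed)."
  (when (and locale
             (not (member locale *demo-available-locales* :test 'string=)))
    (error "Locale not valid or not available: ~s" locale))
  (setf *demo-locale* locale
        *demo-language* locale))

(defmacro with-demo-locale ((locale) &body body)
  "Run BODY with both variables bound to LOCALE."
  ;; LET creates new dynamic bindings (initially NIL); the SETF in
  ;; SET-DEMO-LOCALE only changes those, so the outer values are restored on exit.
  `(let (*demo-locale* *demo-language*)
     (set-demo-locale ,locale)
     ,@body))
```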

However, when you update the translations during development, run this to reload them into gettext's catalogue:

```lisp
#+(or)
(progn
  ;; Clear gettext's cache (it's a hash table)
  (clrhash gettext::*catalog-cache*)
  (gettext:preload-catalogs
   ;; Tell gettext where to find the .mo files
   #.(asdf:system-relative-pathname :bookshops "locale/")))
```

I’ll add a file watcher to automatically reload them later, when I work more with the system.

and:

```lisp
;; Run this to see the list of loaded messages for a specific locale
#+(or)
(gettext::catalog-messages
 (gethash '("fr_fr" :LC_MESSAGES "bookshops") ;; yes, a list for the HT key.
          gettext::*catalog-cache*))
```
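A list works as the key because the cache presumably compares keys with EQUAL (or EQUALP): a freshly consed list is never EQL to the stored one. Here is a toy stand-in for such a cache, plus a hypothetical way to derive the loaded locales from its keys. *TOY-CATALOG-CACHE* and TOY-LIST-LOADED-LOCALES are illustrations only, not the project's list-loaded-locales, whose definition isn't shown here.

```lisp
;; Toy stand-in for gettext's catalogue cache, keyed by (LOCALE CATEGORY DOMAIN)
;; lists as in the call above. Only EQUAL/EQUALP tables find a freshly consed key.
(defvar *toy-catalog-cache* (make-hash-table :test 'equal))

(setf (gethash (list "fr_fr" :lc_messages "bookshops") *toy-catalog-cache*)
      :french-catalog)

(defun toy-list-loaded-locales (&optional (cache *toy-catalog-cache*))
  "Hypothetical helper: the distinct locales found in CACHE's keys."
  (let ((locales '()))
    (maphash (lambda (key value)
               (declare (ignore value))
               (pushnew (first key) locales :test #'string=))
             cache)
    locales))
```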

and:

```lisp
;; Test the translation of a string
#+(or)
(with-locale ("fr_fr")
  (_ "Please login to continue"))
```

Usage

From our readme:

`make tr` takes care of extracting the strings (generating `.pot` files) and generating or updating (with `msgmerge`) `.po` and `.mo` files for each locale. The `.mo` files are loaded in the Lisp image at compile time (or at run time, when developing the application).

How to add a new locale?

- Add the new locale to the `LOCALES` variable in the Makefile.
- Call `make tr`. This will generate the `.po` file (and directory) for the new locale.

How to add a translation for an existing string?

- Update the `.po` file for the locale:
  - Find the `msgid` that corresponds to the string you want to translate.
  - Fill in the `msgstr`.
- Call `make tr` to update the `.mo` file for the locale.

Another blog post I wish I had read a couple of years ago o/

You are welcome to make everything even easier to use.

Happy lisping!